Introduction

Large Language Models (LLMs) like ChatGPT, Claude, Gemini, and Llama are built on a single breakthrough idea: the Transformer.

This article explains, step by step, how transformers work, how they predict text, and how companies like OpenAI train them at scale.

Simple language. Real examples.

1. From Text to Tokens

LLMs don’t read letters. They read tokens — small pieces of text.

Each token can be a word, part of a word, or punctuation.

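Example: "I ate an apple" → ["I", "ate", "an", "apple"] (simplified: real tokenizers often split rare words into several pieces and attach the leading space to a token, as in " ate")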

Each token is first mapped to an integer ID, and that ID is used to look up a vector: a list of numbers that represents the token's meaning in math form.

Example:

- “I” → [0.1, −0.4, 0.9, …]

- “ate” → [0.3, 0.2, −0.7, …]

- “apple” → [0.2, −0.7, 1.1, …]

These numbers are called embeddings.
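To make the token → ID → vector pipeline concrete, here is a minimal Python sketch. The vocabulary, the whitespace tokenizer, and the random embedding values are illustrative assumptions; real models use learned subword tokenizers and learned embedding tables.

```python
import random

# Toy vocabulary: each token string maps to an integer ID.
# Real tokenizers (e.g., BPE) learn tens of thousands of subword tokens;
# splitting on whitespace is a deliberate simplification.
vocab = {"I": 0, "ate": 1, "an": 2, "apple": 3}

def tokenize(text):
    """Split on whitespace and map each piece to its token ID."""
    return [vocab[word] for word in text.split()]

# Embedding table: one row (vector) per token ID. Real models learn these
# values during training; here they are random placeholders.
EMBED_DIM = 4
rng = random.Random(0)
embedding_table = [[rng.uniform(-1, 1) for _ in range(EMBED_DIM)]
                   for _ in range(len(vocab))]

def embed(token_ids):
    """Look up the embedding vector for each token ID."""
    return [embedding_table[i] for i in token_ids]

ids = tokenize("I ate an apple")
vectors = embed(ids)
print(ids)              # [0, 1, 2, 3]
print(len(vectors[0]))  # 4
```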

2. Self-Attention: Understanding Context

Words mean different things in different contexts.

Self-attention helps the model figure that out.

Example:

- “I ate an apple.” → “apple” = fruit

- “Apple released the iPhone.” → “Apple” = company

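Self-attention lets every token look at the other tokens in the sequence (in GPT-style models, only the tokens before it) and decide which ones matter most.

Roughly speaking, “apple” in the first sentence attends strongly to “ate.” In the second sentence the clue “iPhone” comes after “Apple,” so in a GPT-style model, which only looks backward, it is the later tokens such as “iPhone” that attend back to “Apple” and pick up the “company” meaning.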

That’s how the model captures meaning from context.

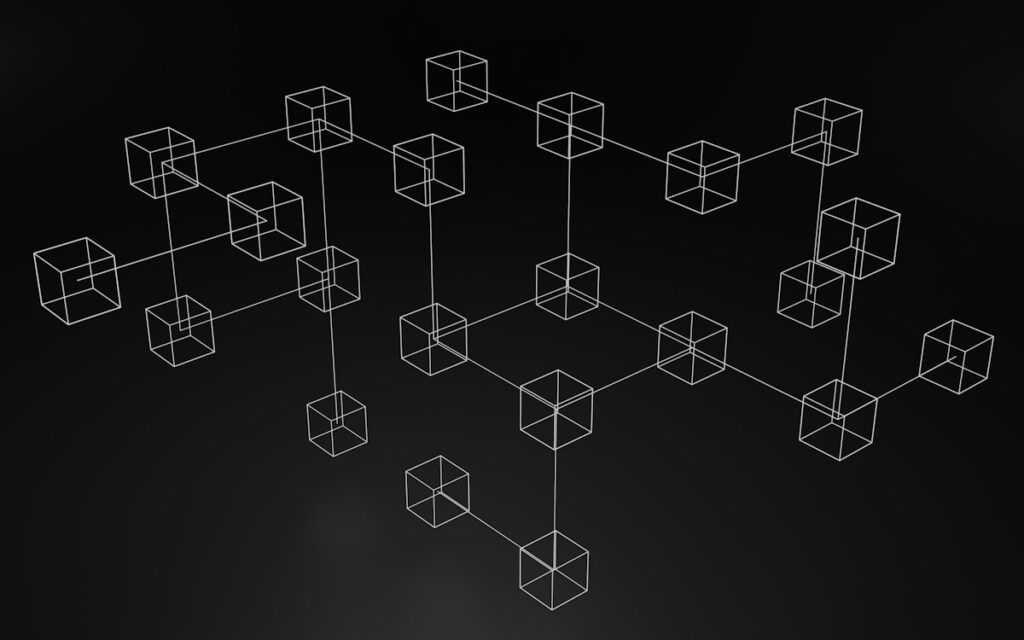
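A minimal sketch of single-head self-attention, assuming the standard scaled dot-product form with a causal mask (each token sees only itself and earlier tokens). In a real model, queries, keys, and values come from multiplying each embedding by separate learned matrices; the usage example simply passes the same toy vectors for all three.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def causal_self_attention(queries, keys, values):
    """Single-head scaled dot-product attention with a causal mask.

    queries, keys, values: lists of vectors, one per token position.
    Token i may only attend to positions 0..i (itself and earlier tokens).
    """
    d = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # Score token i against every token up to and including itself.
        scores = [dot(q, keys[j]) / math.sqrt(d) for j in range(i + 1)]
        weights = softmax(scores)
        # Output = attention-weighted average of the visible value vectors.
        out = [sum(w * values[j][k] for j, w in enumerate(weights))
               for k in range(len(values[0]))]
        outputs.append(out)
    return outputs

x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
out = causal_self_attention(x, x, x)
print(out[0])  # [1.0, 0.0]: the first token can only see itself
```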

3. Multi-Head Attention

Instead of one single focus, transformers use many attention heads.

Each head looks at a different type of relationship.

Example sentence: “The cat sat on the mat.”

- Head 1: connects “cat” ↔ “sat”

- Head 2: connects “sat” ↔ “mat”

- Head 3: connects “the” ↔ “cat”

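Multiple heads let the model track grammar, topic, and relationships at once. The roles above are illustrative: heads are not assigned jobs by hand; each one learns whatever patterns help during training.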
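The sketch below shows one common way multi-head attention is organized, under simplifying assumptions: random toy weight matrices, a model width of 4, and 2 heads. Each head works on its own slice of the projected queries, keys, and values, and the head outputs are concatenated and mixed by an output matrix (`W_o`).

```python
import math
import random

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, x):
    """Multiply matrix W (list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def attend(qs, ks, vs):
    """Causal scaled dot-product attention for one head."""
    d = len(ks[0])
    out = []
    for i, q in enumerate(qs):
        w = softmax([sum(a * b for a, b in zip(q, ks[j])) / math.sqrt(d)
                     for j in range(i + 1)])
        out.append([sum(w[j] * vs[j][k] for j in range(i + 1))
                    for k in range(len(vs[0]))])
    return out

def multi_head_attention(x, W_q, W_k, W_v, W_o, num_heads):
    """Project x to queries/keys/values, split them into heads, attend per head,
    concatenate the head outputs, and mix them with an output projection."""
    d_model = len(x[0])
    d_head = d_model // num_heads
    Q = [matvec(W_q, t) for t in x]
    K = [matvec(W_k, t) for t in x]
    V = [matvec(W_v, t) for t in x]
    heads = []
    for h in range(num_heads):
        s = slice(h * d_head, (h + 1) * d_head)  # this head's slice of dimensions
        heads.append(attend([q[s] for q in Q], [k[s] for k in K], [v[s] for v in V]))
    # Concatenate the head outputs for each token, then apply the output projection.
    concat = [sum((heads[h][i] for h in range(num_heads)), []) for i in range(len(x))]
    return [matvec(W_o, c) for c in concat]

rng = random.Random(42)
d_model, num_heads = 4, 2

def rand_matrix():
    return [[rng.gauss(0, 0.5) for _ in range(d_model)] for _ in range(d_model)]

W_q, W_k, W_v, W_o = (rand_matrix() for _ in range(4))
x = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(3)]  # 3 tokens
out = multi_head_attention(x, W_q, W_k, W_v, W_o, num_heads)
print(len(out), len(out[0]))  # 3 4
```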

4. Transformer Layers

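Each layer has two main parts, both wrapped in a residual connection (the part's output is added back to its input) and usually a normalization step: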

- Self-Attention — collects information from other tokens.

- Feed-Forward Network (MLP) — refines and combines that information.

Dozens of these layers stack up, and each one sharpens meaning further.

By the final layers, “apple” is clearly fruit or company based on context.
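A minimal sketch of how a layer wraps its two parts and how layers stack, assuming the pre-normalization arrangement used in GPT-2 and many later models. `toy_attention` (equal-weight averaging over earlier tokens) and `toy_mlp` are hypothetical stand-ins with no learned weights, chosen only to keep the example short.

```python
import math

def layer_norm(v, eps=1e-5):
    """Rescale a vector to zero mean and unit variance (no learned gain/bias here)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]

def toy_attention(xs):
    """Stand-in for self-attention: each token averages itself and all earlier
    tokens with equal weights (real attention learns the weights)."""
    out = []
    for i in range(len(xs)):
        visible = xs[: i + 1]
        out.append([sum(col) / len(visible) for col in zip(*visible)])
    return out

def toy_mlp(v):
    """Stand-in for the feed-forward network: a fixed non-linear transform."""
    return [max(0.0, x) * 0.5 for x in v]

def transformer_layer(xs):
    # 1) Self-attention part, with a residual connection (output added to input).
    attn = toy_attention([layer_norm(x) for x in xs])
    xs = [[a + b for a, b in zip(x, y)] for x, y in zip(xs, attn)]
    # 2) Feed-forward part, applied to each token separately, plus a residual.
    return [[a + b for a, b in zip(x, toy_mlp(layer_norm(x)))] for x in xs]

def transformer(xs, num_layers):
    """Stack layers (real models give each layer its own learned weights)."""
    for _ in range(num_layers):
        xs = transformer_layer(xs)
    return xs

tokens = [[1.0, 0.0, -1.0], [0.5, 2.0, 0.0], [0.0, -1.0, 3.0]]
out = transformer(tokens, num_layers=6)
print(len(out), len(out[0]))  # 3 3: same shape in, same shape out
```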

5. The Last Token Rule

To predict the next word, the model uses the hidden vector of the last token.

Example:

Sentence: “I ate an apple”

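→ Use the final hidden vector at the “apple” position to predict what comes next. Thanks to attention, that vector already carries information from the whole sentence, not just the word “apple.”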

If the most likely next token is “pie,” the model appends it, and the text becomes:

“I ate an apple pie.”
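The generation loop implied by the last-token rule can be sketched as follows. `toy_model` and its score table are hypothetical stand-ins: they look only at each position's own token, whereas in a real transformer the last position's vector already reflects the whole sentence. The loop itself (predict from the last position, append, repeat) is the part that carries over.

```python
# Hypothetical stand-in for a trained model: next-token scores keyed by token.
# A real model returns one hidden vector per position, later turned into scores.
NEXT_SCORES = {
    "I": {"ate": 2.0, "am": 1.0},
    "ate": {"an": 2.0, "a": 1.5},
    "an": {"apple": 3.0, "egg": 1.0},
    "apple": {"pie": 7.5, "computer": 2.1, "tree": 1.5, "dog": 0.3},
    "pie": {".": 4.0, "crust": 1.0},
    ".": {"<end>": 5.0},
}

def toy_model(tokens):
    """Return next-token scores for every position in the sequence."""
    return [NEXT_SCORES.get(t, {"<end>": 0.0}) for t in tokens]

def generate(tokens, max_new_tokens=10):
    tokens = list(tokens)
    for _ in range(max_new_tokens):
        scores_per_position = toy_model(tokens)
        last = scores_per_position[-1]        # only the last position predicts what comes next
        next_token = max(last, key=last.get)  # greedy: take the highest score
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens

print(" ".join(generate(["I", "ate", "an", "apple"])))  # I ate an apple pie .
```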

6. From Hidden States to Predictions

That last hidden vector is multiplied by a giant output matrix with one row per token in the vocabulary.

The result is a list of scores called logits.

Example (simplified):

- “pie” → 7.5

- “computer” → 2.1

- “tree” → 1.5

- “dog” → 0.3

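Then we apply softmax to turn them into probabilities (treating these four tokens as the whole vocabulary):

- “pie” → ≈ 99.23%

- “computer” → ≈ 0.45%

- “tree” → ≈ 0.25%

- “dog” → ≈ 0.07%

Because softmax exponentiates the scores, a lead of a few points in logits becomes a huge gap in probability.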

With greedy decoding, the model picks “pie,” the most probable next token.
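Here is the hidden vector → logits → softmax step in plain Python. `W_out` and `last_hidden` are made-up toy values, chosen so that the logits reproduce the example above; real output matrices are vastly larger.

```python
import math

# Toy output matrix: one row per vocabulary token. Real models have tens of
# thousands of rows and thousands of columns.
vocab = ["pie", "computer", "tree", "dog"]
W_out = [
    [3.5, 2.0],  # pie
    [0.1, 1.0],  # computer
    [0.5, 0.5],  # tree
    [0.3, 0.0],  # dog
]
last_hidden = [1.0, 2.0]  # hypothetical final vector of the last token

def softmax(logits):
    m = max(logits)  # subtracting the max avoids overflow and does not change the result
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# One dot product per row gives one logit per vocabulary token.
logits = [sum(w * h for w, h in zip(row, last_hidden)) for row in W_out]
probs = softmax(logits)
for token, z, p in zip(vocab, logits, probs):
    print(f"{token:>8}: logit {z:4.1f} -> {p:.2%}")
print("greedy pick:", vocab[probs.index(max(probs))])  # greedy pick: pie
```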

7. Sampling: Controlling Style and Creativity

Generation is not always deterministic.

Settings like temperature, top-k, and top-p decide how random or creative outputs are.

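- Temperature: the logits are divided by this value before softmax. Low values (e.g., 0.2) make output focused and predictable; high values (e.g., 1.5) make it more varied and creative.

- Top-k: sample only from the k most likely tokens (e.g., top 5).

- Top-p (nucleus): sample only from the smallest set of top tokens whose probabilities add up to at least p (e.g., 0.9, i.e., 90%).

- Penalties: lower the scores of tokens that have already appeared, to reduce repetition.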

These sampling tricks balance precision and imagination.
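A sketch of how temperature, top-k, and top-p can be combined when picking the next token. The function name and the order of filtering (temperature first, then top-k, then top-p) are assumptions for illustration; real libraries differ in the details, and repetition penalties are left out.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick a token index from logits using temperature, top-k, and top-p.

    temperature must be > 0: lower values sharpen the distribution,
    higher values flatten it.
    """
    probs = softmax([z / temperature for z in logits])
    # Rank candidate token indices from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k is not None:
        order = order[:top_k]  # keep only the k most likely tokens
    if top_p is not None:
        kept, cumulative = [], 0.0
        for i in order:  # smallest prefix whose probability mass reaches top_p
            kept.append(i)
            cumulative += probs[i]
            if cumulative >= top_p:
                break
        order = kept
    # Draw one surviving token, weighted by its probability
    # (random.choices renormalizes the weights itself).
    weights = [probs[i] for i in order]
    return rng.choices(order, weights=weights, k=1)[0]

vocab = ["pie", "computer", "tree", "dog"]
logits = [7.5, 2.1, 1.5, 0.3]
rng = random.Random(0)
print(vocab[sample_next(logits, top_k=1, rng=rng)])  # pie (top_k=1 is greedy decoding)
print([vocab[sample_next(logits, temperature=5.0, rng=rng)] for _ in range(5)])
```

At temperature 5.0 the distribution is much flatter, so the second line will usually include tokens other than “pie.”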

8. KV Cache: How LLMs Stay Fast

Without optimization, generating every new token would mean reprocessing all previous tokens from scratch.

That’s too slow.

Instead, LLMs use a Key-Value Cache (KV Cache):

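- In every layer, each token’s key and value vectors (two of the three vectors attention computes, alongside the query) are computed once and stored.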

- When generating new tokens, the model reuses cached ones.

- Old tokens don’t look forward; only new tokens look back.

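This works because attention is causal (past → future): earlier tokens never attend to later ones, so their cached keys and values never need updating. The result is much faster generation, at the cost of a cache that grows with the length of the text.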
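To see why caching is safe, the sketch below computes attention for each new token twice: once reusing a cache of earlier keys and values, and once recomputing everything. It assumes a single attention head, one layer, and random toy weights; real caches hold keys and values for every layer and head.

```python
import math
import random

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def attend_one(q, keys, values):
    """Attention output for a single query over the given keys and values."""
    d = len(q)
    weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys])
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]

class KVCache:
    """Stores every past token's key and value so each is computed only once."""
    def __init__(self):
        self.keys, self.values = [], []

def step_with_cache(x_new, cache, W_q, W_k, W_v):
    """Process ONE new token: compute only its own q, k, v and reuse the cached k, v."""
    cache.keys.append(matvec(W_k, x_new))
    cache.values.append(matvec(W_v, x_new))
    return attend_one(matvec(W_q, x_new), cache.keys, cache.values)

def full_recompute(xs, W_q, W_k, W_v):
    """Without a cache: recompute k and v for every token, then attend from the last one."""
    keys = [matvec(W_k, x) for x in xs]
    values = [matvec(W_v, x) for x in xs]
    return attend_one(matvec(W_q, xs[-1]), keys, values)

rng = random.Random(1)
d = 4
W_q, W_k, W_v = ([[rng.gauss(0, 0.5) for _ in range(d)] for _ in range(d)] for _ in range(3))
tokens = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(5)]

cache = KVCache()
for t in range(len(tokens)):
    cached_out = step_with_cache(tokens[t], cache, W_q, W_k, W_v)
    recomputed = full_recompute(tokens[: t + 1], W_q, W_k, W_v)
    assert all(abs(a - b) < 1e-12 for a, b in zip(cached_out, recomputed))
print("cached and recomputed outputs match; cache holds", len(cache.keys), "keys")
```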

9. Inside the Math: Softmax in Two Places

Softmax is used in two key steps:

- Inside attention: turns query-key scores into focus weights, deciding how much each token draws from the others.

- At the output layer: turns logits into probabilities, deciding which token comes next.

Different purposes, same math.
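For reference, the two uses of softmax can be written out. This is the standard scaled dot-product formulation from the original Transformer paper, with a causal mask M as used in GPT-style models; d_k is the key dimension, W_out the output matrix, and h_last the last token's final hidden vector (notation introduced here, not from the article). In attention, softmax is applied to each row separately.

```latex
\operatorname{softmax}(z)_i = \frac{e^{z_i}}{\sum_{j} e^{z_j}}

\operatorname{Attention}(Q, K, V) = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}} + M\right) V,
\qquad
M_{ij} = \begin{cases} 0 & j \le i \\ -\infty & j > i \end{cases}

P(\text{next token} = t) = \operatorname{softmax}\!\left(W_{\text{out}}\, h_{\text{last}}\right)_t
```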

10. Feed-Forward Networks (MLP)

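After attention gathers context, the MLP inside each transformer layer processes every token’s vector on its own: it expands the vector to a larger size (often about 4× wider), applies a non-linear activation such as GELU, and projects it back down.

These non-linear transformations are where much of the model’s learned knowledge and abstraction (cause, logic, tone) is thought to be built up.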
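A minimal sketch of one feed-forward block, assuming the classic expand, GELU, project-back design with a hidden size 4× the model width. The sizes and random weights are toy assumptions; some recent models use other activations (for example, gated variants).

```python
import math
import random

def gelu(x):
    """GELU activation (exact form, using the Gaussian error function)."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def mlp(x, W_up, b_up, W_down, b_down):
    """Position-wise feed-forward network: expand, apply a non-linearity, project back."""
    hidden = [gelu(h + b) for h, b in zip(matvec(W_up, x), b_up)]  # d_model -> d_ff
    return [o + b for o, b in zip(matvec(W_down, hidden), b_down)]  # d_ff -> d_model

rng = random.Random(0)
d_model, d_ff = 4, 16  # d_ff = 4 * d_model, a common choice
W_up = [[rng.gauss(0, 0.5) for _ in range(d_model)] for _ in range(d_ff)]
b_up = [0.0] * d_ff
W_down = [[rng.gauss(0, 0.5) for _ in range(d_ff)] for _ in range(d_model)]
b_down = [0.0] * d_model

token_vector = [0.2, -1.0, 0.7, 0.1]
out = mlp(token_vector, W_up, b_up, W_down, b_down)
print(len(out))  # 4: same size as the input vector
```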

11. How LLMs Are Trained

Training is where the “magic” numbers come from.

Process:

- Collect massive text datasets.

- Feed sentences into the model.

- Predict the next token.

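- Compare the predicted probabilities with the actual next token → compute the loss (cross-entropy).

- Use backpropagation to work out how each of the billions of weights contributed to the loss, then nudge them with gradient descent.

- Repeat across the whole dataset, typically billions to trillions of tokens.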

Over time, the weights capture grammar, facts, reasoning, and associations.
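The training loop above can be sketched at toy scale. This example swaps the transformer for a much simpler bigram model (a table of logits indexed by the previous token), an assumption made to keep it short, but it uses the same ingredients: next-token prediction, cross-entropy loss, gradients, and gradient descent. The tiny corpus is made up.

```python
import math

corpus = "I ate an apple . I ate an apple pie . I ate a pie .".split()
vocab = sorted(set(corpus))
idx = {tok: i for i, tok in enumerate(vocab)}
V = len(vocab)

# Model: one row of logits per previous token (a bigram model).
W = [[0.0] * V for _ in range(V)]

def softmax(z):
    m = max(z)
    e = [math.exp(x - m) for x in z]
    s = sum(e)
    return [x / s for x in e]

def train_step(lr=2.0):
    """One pass over the corpus: predict each next token, measure the loss, update weights."""
    grads = [[0.0] * V for _ in range(V)]
    total_loss = 0.0
    pairs = list(zip(corpus, corpus[1:]))
    for prev, nxt in pairs:
        p = softmax(W[idx[prev]])
        total_loss += -math.log(p[idx[nxt]])  # cross-entropy loss for this prediction
        # Gradient of cross-entropy w.r.t. the logits is (probabilities - one-hot target).
        for j in range(V):
            grads[idx[prev]][j] += p[j] - (1.0 if j == idx[nxt] else 0.0)
    for i in range(V):  # gradient descent: nudge every weight against its average gradient
        for j in range(V):
            W[i][j] -= lr * grads[i][j] / len(pairs)
    return total_loss / len(pairs)

losses = [train_step() for _ in range(300)]
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
p = softmax(W[idx["ate"]])
print("after 'ate':", {tok: round(p[idx[tok]], 2) for tok in ["an", "a"]})
```

In the corpus, “ate” is followed by “an” twice and by “a” once, so the trained probabilities drift toward roughly 2/3 and 1/3.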

12. RLHF and Alignment

After base training, models learn to behave better using Reinforcement Learning from Human Feedback (RLHF):

- Humans compare or rank model outputs.

- A reward model learns their preferences.

- The main model is then fine-tuned with reinforcement learning to favor answers the reward model scores as helpful, safe, and high-quality.

Modern models also use AI feedback, written sets of principles (“constitutions”), and reflection steps for more refined behavior.
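The reward-model step can be sketched with a pairwise loss, -log σ(r_chosen - r_rejected), a common choice described, for example, in OpenAI's InstructGPT work. Everything else here is a hypothetical simplification: each answer is reduced to two made-up features, and the reward model is a single linear function. The final reinforcement-learning step is not shown.

```python
import math

# Hypothetical data: each answer is summarized by two made-up features,
# [helpfulness, rudeness]. Each pair is (chosen, rejected) as picked by a labeler.
preferences = [
    ([0.9, 0.1], [0.4, 0.2]),
    ([0.7, 0.0], [0.8, 0.9]),
    ([0.6, 0.2], [0.2, 0.1]),
    ([0.8, 0.3], [0.5, 0.8]),
]

w = [0.0, 0.0]  # reward model: reward = w . features (a linear stand-in)

def reward(features):
    return sum(wi * fi for wi, fi in zip(w, features))

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

lr = 1.0
for _ in range(500):
    for chosen, rejected in preferences:
        # Pairwise loss: -log sigmoid(margin); small when the chosen answer scores higher.
        margin = reward(chosen) - reward(rejected)
        g = sigmoid(margin) - 1.0  # d(loss)/d(margin)
        for i in range(len(w)):
            w[i] -= lr * g * (chosen[i] - rejected[i])

print("learned weights:", [round(x, 2) for x in w])
print(all(reward(c) > reward(r) for c, r in preferences))  # True
```

The learned weights end up favoring helpfulness and penalizing rudeness, which is the preference pattern hidden in the labels.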

13. Efficiency Tricks at Scale

Companies like OpenAI, Anthropic, and Google use many optimizations:

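- Flash Attention: computes the same attention result in small tiles that fit in fast on-chip GPU memory, saving memory and time.

- MoE (Mixture of Experts): multiple specialized MLPs, with only a few active per token.

- Quantization: storing weights with fewer bits (e.g., 8-bit integers instead of 16-bit floats), which cuts memory use and speeds up inference.

- Parallelization: training split across many GPUs and servers.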

These make trillion-parameter models practical.
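Of these tricks, quantization is the easiest to show in a few lines. The sketch assumes simple symmetric per-tensor int8 quantization: each weight is stored as an integer in [-127, 127] plus one shared float scale, so it takes 1 byte instead of 2 (16-bit) or 4 (32-bit). Production schemes are more elaborate (per-channel scales, 4-bit formats, and so on).

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: small integers plus one float scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.55]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
print(q)  # [42, -127, 0, 90, -55]
# Rounding error per weight is at most half a quantization step.
print(max(abs(a - b) for a, b in zip(weights, approx)) <= scale / 2)  # True
```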

14. Vision LLMs

Newer models can process both text and images.

They split images into small patches (for example, 16×16 pixels), convert each patch to a vector, and feed those vectors into the same transformer as text.

Text tokens and image patches share one embedding space.

That’s how the model can answer “What is in this picture?” or “Describe this chart.”
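The patch step can be sketched as below, in the style of Vision Transformers. The image size, patch size, and random projection matrix `W_proj` are toy assumptions, and the image height and width are assumed to be multiples of the patch size.

```python
import random

def image_to_patch_vectors(image, patch, W_proj):
    """Split an H x W x C image into non-overlapping patch x patch squares,
    flatten each square, and project it to the model's embedding size."""
    H, W, C = len(image), len(image[0]), len(image[0][0])
    vectors = []
    for top in range(0, H, patch):          # row by row...
        for left in range(0, W, patch):     # ...left to right, like reading text
            flat = [image[y][x][c]
                    for y in range(top, top + patch)
                    for x in range(left, left + patch)
                    for c in range(C)]
            vectors.append([sum(w * f for w, f in zip(row, flat)) for row in W_proj])
    return vectors

rng = random.Random(0)
H = W = 8     # toy 8x8 image
C = 3         # RGB channels
P = 4         # patch size
d_model = 6   # embedding size shared with text tokens
image = [[[rng.random() for _ in range(C)] for _ in range(W)] for _ in range(H)]
W_proj = [[rng.gauss(0, 0.1) for _ in range(P * P * C)] for _ in range(d_model)]
patch_tokens = image_to_patch_vectors(image, P, W_proj)
print(len(patch_tokens), len(patch_tokens[0]))  # 4 6
```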

15. Quick Recap

- Text → Tokens → Vectors

- Self-Attention finds context

- Multi-Head Attention sees multiple relations

- Feed-Forward refines meaning

- Last token predicts next token

- Softmax converts scores to probabilities

- Sampling adds creativity

- KV cache speeds generation

- Training adjusts billions of weights

- RLHF and alignment make models useful and safe

16. Why It Matters

LLMs don’t simply look up stored answers.

They learn relationships between tokens in context.

That’s why they can write, translate, reason, and code — not just recall text.

Every message you see from ChatGPT or Claude is produced one token at a time, with each new token passing through dozens of stacked transformer layers.

Final Thoughts

Understanding transformers helps demystify AI.

They are not conscious or magical — just math optimized for predicting text with context awareness.

But through scale and clever engineering, this math produces language that feels human.